How to implement AI governance best practices in 2025

Organizations worldwide are rapidly integrating artificial intelligence into their operations, fundamentally transforming how teams work and make decisions. This technological shift brings unprecedented opportunities for productivity and innovation, but also introduces complex challenges around ethics, compliance, and risk management.

As AI systems become more sophisticated and autonomous, the need for robust governance frameworks has never been more critical. Adoption alone does not guarantee results either: in one survey, workers using generative AI reported saving only 5.4% of their work hours, which translated to just a 1.1% increase in overall productivity across all workers. Without proper oversight, AI implementations can lead to biased outcomes, regulatory violations, data breaches, and erosion of stakeholder trust, consequences that can damage both reputation and the bottom line.

The most successful enterprises recognize that AI governance isn't just about risk mitigation; it's about creating sustainable competitive advantage through responsible innovation. By establishing clear principles, processes, and accountability structures, organizations can harness AI's transformative power while maintaining the trust of employees, customers, and regulators.

What is AI governance?

AI governance encompasses the comprehensive frameworks, policies, and practices that guide how organizations develop, deploy, and manage artificial intelligence systems. At its core, it establishes the rules of engagement for AI technologies — ensuring they operate ethically, transparently, and in alignment with both organizational values and regulatory requirements.

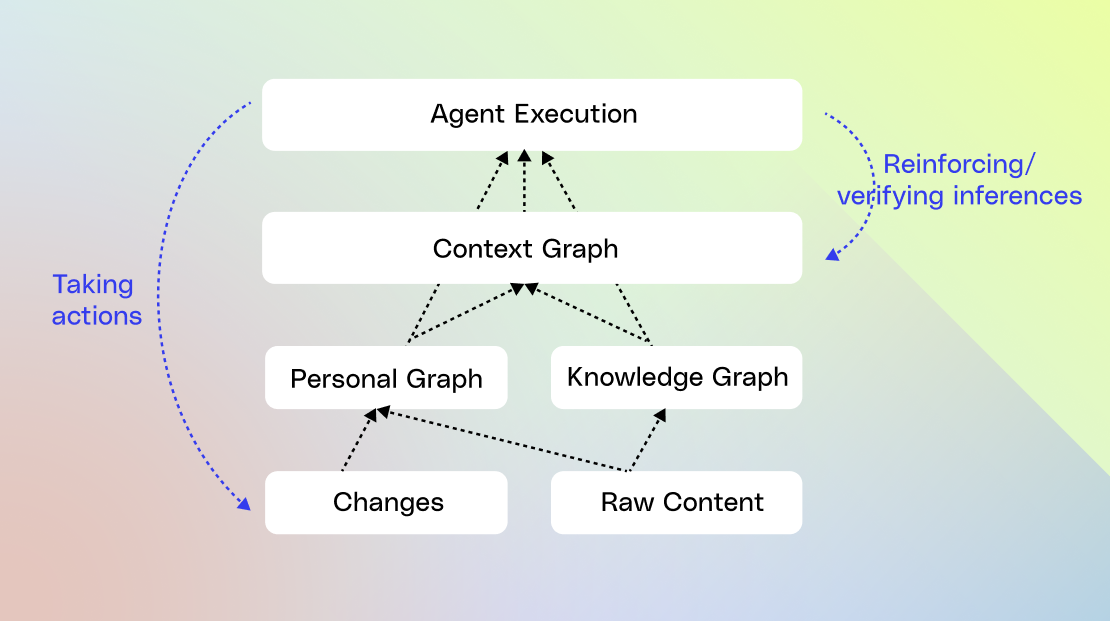

Think of AI governance as the operational blueprint that transforms high-level ethical principles into concrete actions. It addresses fundamental questions: Who has access to AI systems? How are decisions documented and explained? What safeguards prevent discriminatory outcomes? These frameworks create accountability structures that ensure human oversight remains central, even as AI systems handle increasingly complex tasks.

The scope of AI governance extends across the entire AI lifecycle, from initial design through deployment and ongoing monitoring.

For enterprise teams in engineering, customer service, and HR, effective AI governance translates abstract principles into practical guardrails. It determines how a support team's AI assistant handles sensitive customer data, guides an engineering team's use of code generation tools, and shapes how HR departments deploy AI in recruitment processes. Evidence from these areas shows why unexamined adoption is risky. In one study, AI hiring models awarded female candidates scores 0.45 points higher and penalized black male candidates by 0.30 points, even when qualifications were identical. In another, developers allowed to use AI tools took 19% longer to complete issues, a slowdown that contradicted both the developers' own expectations and expert forecasts. Without these frameworks, organizations risk creating "black box" systems that operate without transparency or accountability, a recipe for legal liability, ethical breaches, and loss of stakeholder trust.

How to implement AI governance best practices

Implementing AI governance best practices requires a strategic approach that balances innovation with responsibility. Start by identifying the critical elements of a governance structure. This involves establishing principles that align with your organization’s mission and focus on ethical AI deployment.

Step 1: Develop a comprehensive AI governance framework

Building a robust AI governance framework is essential for guiding ethical AI deployment. This framework should articulate principles that are in harmony with your organization's mission, ensuring AI activities support strategic goals. By establishing these guidelines, you create a cohesive approach to managing the ethical and operational boundaries of AI systems.

Key components of an AI governance framework

Emphasize Information Security: Protecting sensitive information is fundamental to any governance framework. Implement comprehensive data security measures, such as encryption, access controls, and limits on what data may be shared with AI tools, to ensure compliance with applicable laws. The threat landscape is shifting quickly: phishing emails rose 202% in the second half of 2024, 82.6% of phishing emails now use AI technology, and 78% of people open AI-generated phishing emails. By emphasizing information security, you shield your organization from potential legal and reputational challenges.

Promote Clarity and Responsibility: Clarity involves making AI operations transparent and open to stakeholders. Implement structures that clearly outline decision-making processes and assign responsibility. This openness not only builds trust but also aids in meeting compliance obligations.

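As an illustration of information security in practice, the sketch below redacts a few common kinds of sensitive data from text before it is sent to an AI tool. It is a minimal Python example, not a production data-loss-prevention control: the pattern labels, the regular expressions, and the `redact` function are hypothetical, and real coverage (names, addresses, account numbers) would need to be much broader.

```python
import re

# Hypothetical patterns for illustration only. Order matters: the SSN pattern
# runs before the broader phone pattern, which would otherwise match SSNs too.
REDACTION_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in REDACTION_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Customer jane.doe@example.com (555-123-4567) asked about SSN 123-45-6789."
    print(redact(prompt))
    # Customer [EMAIL] ([PHONE]) asked about SSN [SSN].
```

Typed placeholders keep the prompt readable for the model while removing the values themselves.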

Step 2: Ensure compliance with AI governance standards

Ensuring compliance with AI governance standards demands meticulous attention to data handling and regulatory adherence. Implement a permissions-aware framework that prioritizes precise data management and access protocols. By defining clear guidelines for data interaction, organizations can safeguard against unauthorized access and maintain data integrity.

Establishing a permissions-aware framework

Implement Role-Based Access: Assign access rights based on specific user roles, ensuring that data is handled by authorized personnel only. Use strong verification measures, such as multi-factor authentication, to reduce the risk of unauthorized access.

Conduct Regular Evaluations: Systematically assess access permissions to confirm alignment with organizational policies. Implement checks to ensure compliance and address any discrepancies swiftly, thereby strengthening security measures.

Seamless Integration: Align the permissions framework with existing infrastructure, allowing for consistent security application across all systems. This alignment enhances operational coherence and supports comprehensive compliance efforts.
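A permissions-aware setup can be sketched in a few lines of Python. The example assumes a hypothetical mapping from roles to the data classifications they may read (`ROLE_POLICY`); an AI assistant receives only documents the requesting user could already open, and a periodic review reports any grants that exceed policy. The role names, classifications, and functions are illustrative assumptions, not any particular product's API.

```python
from dataclasses import dataclass

# Hypothetical policy: the data classifications each role may read.
ROLE_POLICY: dict[str, set[str]] = {
    "support_agent": {"public", "customer"},
    "hr_partner": {"public", "employee"},
    "engineer": {"public", "source_code"},
}


@dataclass(frozen=True)
class Document:
    doc_id: str
    classification: str


def can_access(role: str, doc: Document) -> bool:
    """Deny by default: a role missing from the policy can read nothing."""
    return doc.classification in ROLE_POLICY.get(role, set())


def filter_for_ai(role: str, docs: list[Document]) -> list[Document]:
    """Pass the AI assistant only documents the requesting user may already open."""
    return [doc for doc in docs if can_access(role, doc)]


def review_grants(grants: dict[str, tuple[str, set[str]]]) -> dict[str, set[str]]:
    """Periodic access review.

    `grants` maps user -> (role, classifications actually granted). Returns, per
    user, any classifications granted beyond what the role's policy allows.
    """
    findings: dict[str, set[str]] = {}
    for user, (role, granted) in grants.items():
        excess = granted - ROLE_POLICY.get(role, set())
        if excess:
            findings[user] = excess
    return findings
```

Denying access by default for unknown roles is a deliberate choice: a missing policy entry results in no access rather than silent over-sharing.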

Adapting to regulatory changes

Proactive Monitoring: Keep updated on regulatory developments to ensure ongoing compliance. This involves actively tracking changes from relevant authorities and incorporating them into governance practices. Anticipating regulatory shifts enables timely policy adjustments.

Dynamic Policy Review: Regularly revise governance policies to incorporate new regulatory requirements. This includes updating documentation and training team members on the latest standards, ensuring that practices remain compliant and effective.

Engage Compliance Experts: Work closely with legal and compliance teams to interpret regulatory changes accurately. Their insights ensure that governance practices not only meet but also exceed compliance expectations, safeguarding against potential risks.
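Dynamic policy review can be partly automated. This hypothetical sketch flags a policy for review when either its scheduled review interval has elapsed or a regulation it depends on has changed since its last review. The `Policy` fields, the regulation keys (`reg_a`, `reg_b`), and the dates are placeholders; knowing which regulations changed, and when, would still come from your legal and compliance teams.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Policy:
    name: str
    last_reviewed: date
    review_interval_days: int
    # Keys of the regulations this policy implements (hypothetical identifiers).
    regulations: set[str] = field(default_factory=set)


def policies_needing_review(
    policies: list[Policy], regulatory_updates: dict[str, date], today: date
) -> list[str]:
    """Flag policies whose review is due or whose regulations changed since last review."""
    due = []
    for p in policies:
        scheduled = p.last_reviewed + timedelta(days=p.review_interval_days) <= today
        triggered = any(
            regulatory_updates.get(reg, date.min) > p.last_reviewed
            for reg in p.regulations
        )
        if scheduled or triggered:
            due.append(p.name)
    return due


policies = [
    Policy("Acceptable AI use", date(2025, 1, 10), 90, {"reg_a"}),
    Policy("Data retention", date(2025, 5, 1), 180, {"reg_b"}),
    Policy("Model documentation", date(2025, 5, 1), 180, {"reg_a"}),
]
print(policies_needing_review(policies, {"reg_a": date(2025, 5, 15)}, date(2025, 6, 1)))
```

Combining a fixed cadence with change-triggered reviews means a policy is revisited on schedule and also whenever a regulation it depends on moves.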

By keeping governance frameworks adaptable and aligned with regulatory standards, organizations can leverage AI responsibly while maintaining trust and compliance.

Step 3: Implement AI risk management

Effective AI risk management involves a proactive approach to addressing potential ethical and security challenges in AI systems. Establishing a structured risk management process allows organizations to navigate these complexities confidently, ensuring systems are robust and reliable.

Conducting thorough evaluations

Map Out Risks: Begin by identifying areas where AI systems may face ethical dilemmas or security threats. Consider data handling, algorithmic fairness, and compliance with industry standards. This comprehensive mapping provides a clear overview of potential vulnerabilities.

Prioritize and Analyze: Assess the severity and frequency of each identified risk. This prioritization helps allocate resources effectively, focusing on the most pressing concerns that require immediate attention.

Craft Strategic Responses: Develop tailored strategies to manage each risk. This includes preventive measures and adaptive responses to minimize potential impacts, ensuring resilience in the face of challenges.
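The map, prioritize, and respond steps above are often captured in a risk register. The sketch below assumes a 1 to 5 scale for severity and frequency and ranks risks by their product, a common but simplified scoring convention; the scale, the action threshold of 12, and the example risks are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    severity: int   # assumed scale: 1 (minor) to 5 (critical)
    frequency: int  # assumed scale: 1 (rare) to 5 (constant)
    response: str = ""

    @property
    def score(self) -> int:
        return self.severity * self.frequency


def prioritize(risks: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks scoring at or above the threshold, highest score first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [r for r in ranked if r.score >= threshold]


register = [
    Risk("Biased candidate screening", severity=5, frequency=3, response="Quarterly bias audit"),
    Risk("Sensitive data in prompts", severity=4, frequency=4, response="Redact before sending"),
    Risk("Outdated model documentation", severity=2, frequency=2),
]
for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}: {risk.response}")
```

Risks below the threshold stay in the register for monitoring; only the ones above it demand an immediate response.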

Utilizing audits for assurance

Structured Audits: Implement regular audits to verify that AI operations comply with ethical guidelines and accountability requirements. These audits serve as checkpoints to confirm that systems function as intended.

Dynamic Oversight: Establish mechanisms for continuous oversight, allowing for real-time detection and correction of deviations. This dynamic approach ensures ongoing compliance and ethical integrity.

Collaborative Input: Involve a diverse range of stakeholders in the audit process to gain comprehensive insights. Their perspectives can reveal additional areas for improvement and enhance risk management strategies.
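For dynamic oversight of a system such as a hiring model, one widely used heuristic is the four-fifths rule: if a group's selection rate is less than 80% of the highest group's rate, the result is flagged for review. The sketch below applies that check to counts of selected and total candidates per group. The group names and numbers are hypothetical, and a flag signals the need for human investigation rather than proof of bias.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / total, skipping groups with no candidates."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def adverse_impact_flags(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.8
) -> dict[str, float]:
    """Four-fifths check: flag groups whose rate is below threshold x the top rate.

    Returns each flagged group's impact ratio (its rate / highest group rate).
    """
    rates = selection_rates(outcomes)
    top = max(rates.values(), default=0.0)
    if top == 0:
        return {}
    return {g: r / top for g, r in rates.items() if r / top < threshold}


# Hypothetical weekly counts: group -> (candidates advanced, candidates screened)
weekly = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(adverse_impact_flags(weekly))
```

Running a check like this on every batch of decisions, rather than once a year, is what turns a periodic audit into continuous oversight.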

Through a well-defined risk management framework, organizations can uphold ethical standards and fortify AI systems against potential threats, reinforcing stakeholder trust and system reliability.

Step 4: Foster AI transparency and accountability

Ensuring openness and responsibility in AI systems reinforces trust and integrity. Organizations must implement strategies that make AI operations and decisions clear to stakeholders, enhancing both understanding and confidence in these technologies.

Establishing comprehensive records

Integrated Documentation: Develop a cohesive documentation strategy that captures AI processes and decision criteria. This integration ensures that all relevant information is systematically recorded and easily retrievable.

Transparent Decision Logs: Create detailed logs that track AI decision-making pathways, explaining the logic and data inputs involved. These logs should be concise and provide clarity on how outcomes are reached.

Information Accessibility: Make documentation available through user-friendly platforms, enabling quick access and facilitating informed discussions about AI functionalities.
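A transparent decision log can be as simple as one structured record per AI decision. This Python sketch, assuming decisions are appended to a JSON Lines file, records the model version, a hash of the inputs rather than the raw values, the output, a human-readable rationale, and an optional reviewer. The field names and the `log_decision` function are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Optional


def log_decision(
    model_version: str,
    inputs: dict[str, Any],
    output: Any,
    rationale: str,
    reviewer: Optional[str] = None,
) -> str:
    """Build one JSON Lines record describing a single AI decision."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Store a hash of the inputs, not the values, to keep sensitive data out of the log.
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "input_fields": sorted(inputs),
        "output": output,
        "rationale": rationale,
        "human_reviewer": reviewer,
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(log_decision(
        "screening-model-v3",
        {"years_experience": 6, "role_applied": "analyst"},
        "advance_to_interview",
        "Meets the minimum experience requirement for the role",
        reviewer="hr.partner",
    ))
```

Because the inputs are serialized with `sort_keys=True` before hashing, the same inputs always produce the same hash, so a record can be matched back to its source data without copying sensitive values into the log.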

Promoting a culture of clarity

Facilitated Discussions: Encourage open forums where team members can discuss AI systems and their implications. This fosters a collaborative environment that values honest communication and feedback.

Engaging Stakeholders: Involve diverse stakeholder groups in AI planning and evaluation, drawing on their insights to improve system transparency and effectiveness.

By adopting these practices, organizations can create an environment where AI transparency and accountability are prioritized, ensuring alignment with ethical standards and organizational goals.

Step 5: Establish AI ethics and training programs

Developing a strong AI ethics framework is crucial for guiding responsible technology use across an organization. This involves not only defining ethical guidelines but also ensuring that all employees understand and adhere to them. By integrating ethics into training programs, organizations foster a culture of responsibility and awareness.

Building a comprehensive ethics curriculum

Foundation of Principles: Create a curriculum that emphasizes core AI values such as impartiality, responsibility, and openness. These values should steer all AI-related activities, ensuring that ethical considerations are integral to decision-making. Building this expertise in-house is increasingly urgent: 68% of companies face a moderate to extreme AI talent shortage, with a projected shortfall of 700,000 AI jobs by 2027, even though AI specialists already command a 56% salary premium.

Real-World Applications: Use examples and scenarios that employees might encounter. This practical approach helps them identify ethical challenges and apply principles effectively in their roles.

Engaging Formats: Use interactive sessions and workshops to actively involve employees. This approach encourages dialogue and reflection, deepening their understanding of AI ethics.

Certification and ongoing education

Certification Programs: Launch certification initiatives that confirm employees' grasp of AI ethics and governance. These programs offer recognition for competency and commitment to ethical practices.

Continuous Learning: Implement ongoing educational opportunities to keep employees informed about the latest in AI ethics and governance. Regular updates ensure alignment with evolving standards and practices.

Feedback Channels: Establish methods for employees to share feedback on training programs. This input helps refine and improve the curriculum to better meet organizational needs.

By embedding ethics into training and certification programs, organizations can nurture an environment where ethical AI use is a collective responsibility, enhancing trust and accountability across all levels.

Tips on implementing AI governance

Implementing effective AI governance requires a strategic framework that emphasizes adaptability, stakeholder involvement, and proactive adjustments. This approach ensures that AI initiatives are not only innovative but also aligned with evolving business landscapes.

Align AI initiatives with organizational vision

Visionary Integration: Position AI governance as a core element of your organization's future vision. This ensures that AI projects contribute to long-term goals and are embedded in the company's strategy rather than run as isolated experiments.

Outcome Evaluation: Develop robust evaluation methods to measure AI's impact on organizational milestones. This shows how AI initiatives contribute to broader business success, and the need is real: despite $30 to 40 billion in enterprise GenAI investment, one report found that 95% of organizations are achieving zero measurable return.

Prioritization Strategy: Focus efforts on AI projects that align with critical business opportunities and challenges. This strategic prioritization ensures that resources are directed towards initiatives with the highest potential for meaningful impact.
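Outcome evaluation needs a baseline. The sketch below compares each initiative's current KPI against its pre-deployment baseline and separates initiatives that clear a minimum improvement bar from those that do not. The initiatives, KPIs, figures, and the 5% bar are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    kpi: str
    baseline: float  # KPI value before the AI rollout
    current: float   # KPI value after the AI rollout
    higher_is_better: bool = True


def relative_improvement(item: Initiative) -> float:
    """Fractional change versus baseline, signed so that positive means better."""
    if item.baseline == 0:
        raise ValueError(f"{item.name}: baseline must be non-zero")
    change = (item.current - item.baseline) / item.baseline
    return change if item.higher_is_better else -change


def review_portfolio(
    items: list[Initiative], min_improvement: float = 0.05
) -> tuple[list[str], list[str]]:
    """Split initiatives into (meeting the bar, not meeting the bar)."""
    on_track: list[str] = []
    at_risk: list[str] = []
    for item in items:
        target = on_track if relative_improvement(item) >= min_improvement else at_risk
        target.append(item.name)
    return on_track, at_risk


portfolio = [
    Initiative("Support assistant", "tickets resolved per agent-hour", 4.0, 4.6),
    Initiative("Code review bot", "median review time (hours)", 10.0, 9.8, higher_is_better=False),
    Initiative("Screening assistant", "days to shortlist", 8.0, 6.0, higher_is_better=False),
]
print(review_portfolio(portfolio))
```

The `higher_is_better` flag lets time-based KPIs, where a drop is an improvement, share the same bar as throughput KPIs.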

Foster cross-departmental engagement

Unified Collaboration: Encourage collaboration across departments to integrate diverse insights into AI governance. By fostering a culture of shared knowledge, you enhance the effectiveness of governance practices and decision-making.

Role Clarity: Clearly define roles and responsibilities for everyone involved in AI projects. This clarity ensures coordinated efforts and seamless integration of AI into various functions.

Continuous Feedback Loops: Establish ongoing channels for feedback that enable continuous refinement of AI strategies. This dynamic interaction empowers stakeholders to contribute insights, fostering an environment of collective growth and accountability.
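Role clarity is often formalized with a RACI matrix (Responsible, Accountable, Consulted, Informed). The sketch below checks one common convention: every activity has exactly one Accountable party and at least one Responsible party. The activities and team names are hypothetical, and organizations that follow a different convention would adjust the rules.

```python
VALID_CODES = {"R", "A", "C", "I"}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check a RACI matrix mapping activity -> {party: code}.

    Convention assumed here: exactly one Accountable ("A") and at least one
    Responsible ("R") per activity, and only R/A/C/I codes.
    """
    problems = []
    for activity, assignments in matrix.items():
        codes = list(assignments.values())
        invalid = sorted(set(codes) - VALID_CODES)
        if invalid:
            problems.append(f"{activity}: invalid codes {invalid}")
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(f"{activity}: expected exactly one 'A', found {accountable}")
        if "R" not in codes:
            problems.append(f"{activity}: no Responsible party")
    return problems


raci = {
    "Approve a new AI tool": {"Security": "A", "IT": "R", "Legal": "C", "All staff": "I"},
    "Audit the hiring model for bias": {"HR": "R", "Data science": "R"},
}
for problem in validate_raci(raci):
    print(problem)
```

A check like this catches the most common gap in cross-departmental work: an activity that several teams touch but no single party owns.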

By adopting these strategies, organizations can create a dynamic AI governance framework that supports adaptability, innovation, and sustained progress, ensuring a cohesive approach to AI implementation.

As you build your AI governance framework, remember that successful implementation requires both the right principles and the right technology foundation. We understand that navigating AI governance can be complex, which is why we're committed to helping organizations deploy AI responsibly and effectively. Request a demo to explore how Glean and AI can transform your workplace and discover how we can support your journey toward secure, ethical, and impactful AI adoption.